LCNAF & Trie

Storing +11M unique LCNAF names in 50MB Trie data structure

Nov 12 2025

The core of this post is looking at a pretty niche problem but it spans across a number of interconnected interests including controlled vocabularies, data structures and web development within library data. In general library data could be summarized as being esoteric and old. Often data originated in a paper format many decades ago and through endless migrations exists with us today. This longevity and consistent(-ish) nature of its production result in really unique datasets that have inherent qualities. These unique qualities made me wonder if they could be used to work with it in interesting ways. Specifically in this case we are going to look at how we can represent the Library of Congress Name Authority File (LCNAF) in a non-traditional data structure and what applications that opens up.

LCNAF is one of those esoteric and old datasets. It originated with paper catalog cards and is used as an index of contributor names. Whenever a resource is cataloged at the Library of Congress (or other organizations that participate) a unique label is made for the contributor to the resource. If the name already exists then it is made more specific to identify that contributor. The rules have changed a little over the decades of course but the general feature of the dataset is still true resulting in a huge list of unique names. Nowadays most computer people would scoff at the idea of using unique labels as an index, that’s what identifiers are for. LCNAF does now use identifiers as well but that consistent practice of creating a unique label has continued from the beginning resulting in an index of over 11 million unique names. It is the uniqueness quality of the labels that made me think it could be represented in a more efficient data structure like a trie tree.

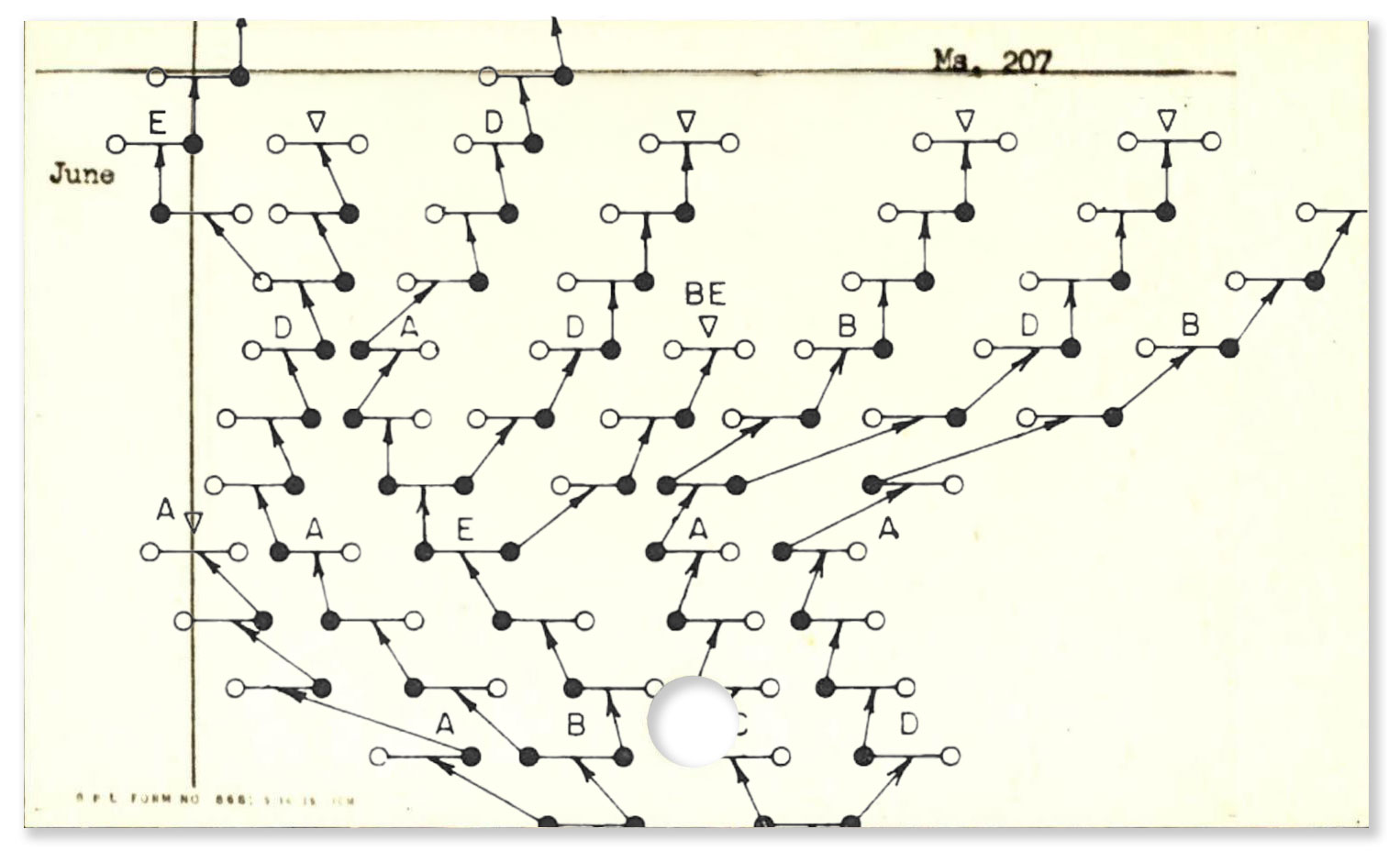

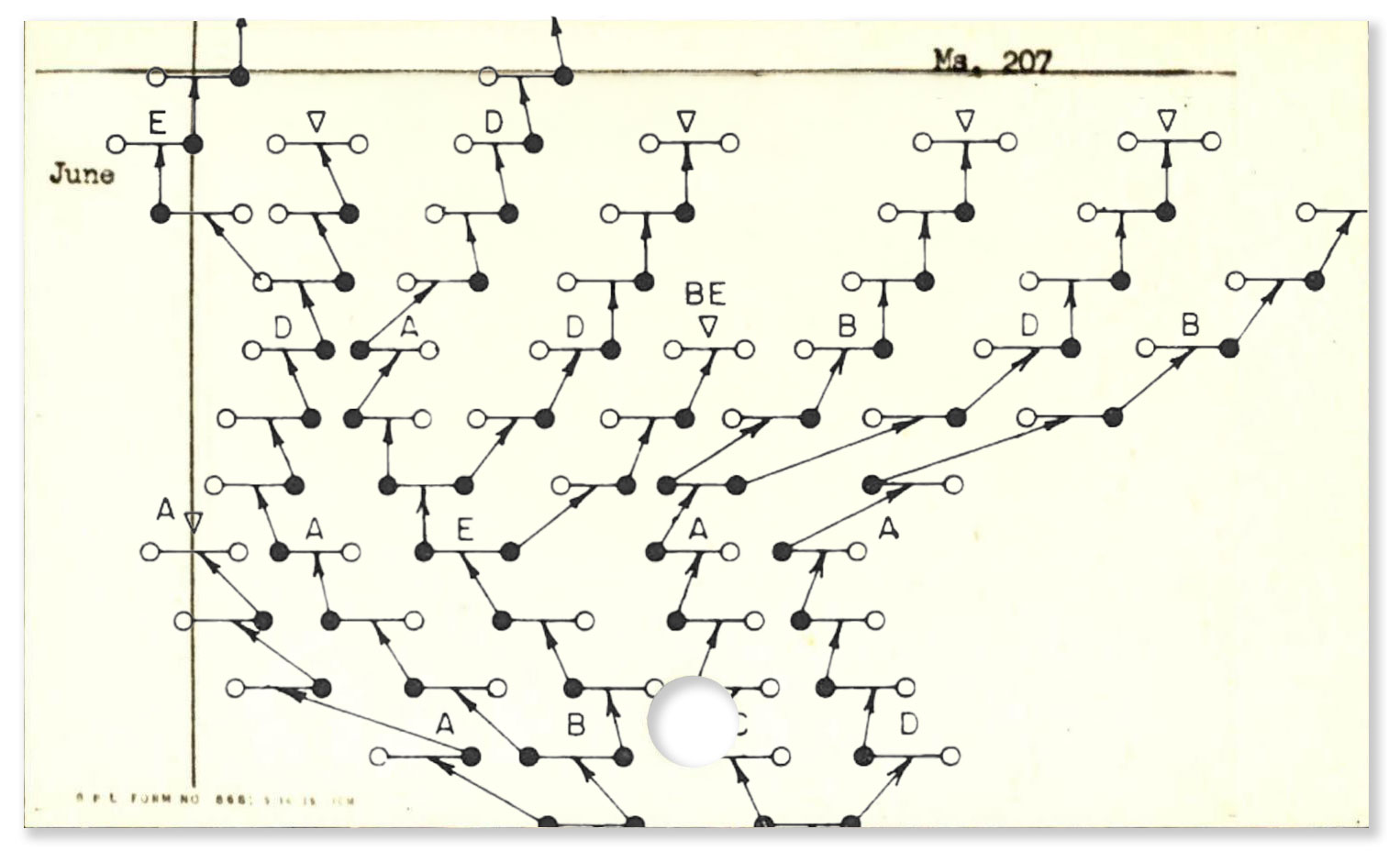

The trie is an interesting data structure concept designed specifically to efficiently store strings. It allows for strings that share sequential characters to be linked together removing the need to repeat characters that they share. The diagram to the right from the Wikipedia page shows how this works visually. The words “tea”, “ten” and “ted” all share the prefix “te” so why store that three times when you can store it once and link it to the three words. The goal here is to reduce the storage needed to keep the index in memory. If you get the index small enough you can do interesting things, like maybe you don’t need a database to work with this data, or maybe you could put the index into the web browser and do lookups client side. Of course ultimately the use cases revolve around a user looking up a name to see if it exists. The goal behind using the trie in this case then is to make the dataset more usable by requiring less infrastructure.

Trying different approaches with the dataset I found that I got better compression if I made the labels more likely to have shared prefixes. The more shared prefixes the less characters needing to be stored. To do this I normalized and alpha sorted the label, for example here is the authorized heading for Virginia Woolf and the conversion to an alpha sorted string:

The string normalization and sorting process followed these steps:

This works because even alpha sorted the string will likely still be unique but now the characters are arranged to better slot into the Trie data structure. And while there are thousands of normalized label collisions across the 11 million sorted labels (which in itself is kind of fun, finding all the name anagrams) the extra steps needed to keep track of them still is worth it for the compression saved. I found if I stored the 11M labels in a trie as-is without normalization the resulting file would be over 100MB, applying the sorting resulted in a 50MB file.

In the case of the Virginia Woolf heading we can see the number of other headings that share a similar prefix. Below for each line is the prefix and the number of headings that use that prefix as well:

Woolf, Virginia, 1882-1941 –> afgiiilnoorvw11124889 a - 9.8M af - 25K afg - 6,233 afgi - 2,324 afgii - 1,012 afgiii - 317 afgiiil - 115 afgiiiln - 49 afgiiilno - 22 afgiiilnoo - 8 afgiiilnoor... - 1

So all the way up until “afgiiilnoo - 8” we are saving a lot of space using the Tire to store the labels.

One drawback of the Trie format is that it is not meant to encode additional metadata about the string. You just have two pieces of data in the trie: the string and the id position of the string in the trie. And since we put this normalized string into the trie we lost the original label. Additionally in our case each label heading has a unique identifier called a LCCN, Library of Congress Control Number that is useful to get additional information or build a linked data URI identifier for use in cataloging. To get around this we have to make a lookup file. This file is just a huge array of LCCNs for the records who have a unique normalized string, if there are collisions we also have to store the original label to be able to disambiguate which label they are searching. The index in the lookup array (the position in the array) matches the trie position id, so we are using the trie id as the key to link the two data structures. In the case of collisions, for example these two headings:

Narke, Rob Borkan, R.E.

If we follow our normalization rules we end up with the same string: abeknorr

So we simply store the original label for both and we can compare them to what the user imputed to see which they are looking for. We don’t need to store the original labels for everything just in the cases where there is a collision. I also improved storage of the LCCNs by replacing an alpha prefix they have to a number map for each prefix which allows all the LCCNs to be stored as numbers instead of strings. And one final way to shrink this lookup file was to use the MessagePack serialization

The file size combined after GZiping both files (even though they are binary it still resulted in smaller files) is around 100MB total. That sounds large but it is really not that outrageous anymore these days. That is the equivalent of less than a minute of very high resolution video. At 100MB I feel it is pushing the upper limit of being too large, but we can now do something in the web browser using the full LCNAF index.

The first application I made using this process loads the trie data structure and lookup file and allows you to do an exact match of a heading to the LCCN. This is limited functionality for searching but really useful for reconciliation processes. A lot of existing library data does not have the LCCN identifiers in the record, just the label. This website allows you to load a MARC binary or XML and it will populate the identifiers into the subfield zero in certain fields where LCNAF names are used in the record. This works without sending the MARC data anywhere, it is done entirely in the browser. Try it out below or click the button to open it in a new window.

To stress test this I dragged in a 650MB MARC binary file to reconcile 480K records, it took four minutes and here is the result:

About 88% of the labels were able to be reconciled and assigned an LCCN, the site lets you “download” the modified MARC record which includes the subfield zero populated with the LCCN as a id.loc.gov URI. The “poor match” is a result of the situation when there are normalized label collisions. If there are two or more labels that when normalized are the same it keeps track of the original label in the lookup file. If a input value matches one of these multiple possibilities it does a simple Levenshtein distance calculation to judge which one of the stored original labels best matches the input value. This almost always works but sometimes there could be a coincidence that the normalized input string matches something but the Levenshtein check doesn’t find a good match.

The reconcile interface proves the concept of having a small trie file in the browser lets you do some interesting things within the confines of a web browser. But it is not a very friendly interface. You need to know the exact headings or be working with MARC records to do anything. This next interface sacrifices the max compression for usability. I built another trie but without the normalization, this means we don't lose the original string heading and we can do partial leftanchored prefix searches. So we can now search for "woolf v" and see all the headings that start with "Woolf, V" This also works due to the nature of how the headings are structured. The trie format is exact, case, punctuation and even white spaces matter. But due to the rules of how the heading is constructed I can have the interface try a number of permutations when the user enters "woolf v" it automatically tries "Woolf v", "Woolf, v", "Woolf V", "Woolf, V" etc. to find the results.

This interface does load about 135MB of data compared to the 100MB reconcile interface but is much more useful for instant search results across 11.6M names without a backend. You still need some sense of how the headings are constructed but does seem useful as a cataloging companion tool.

I also created some non-web based tools using this trie data. The first is an OpenRefine service you can run locally that you can then use as service in your OpenRefine tool. This allows you to reconcile names in a spreadsheet to LCNAF (exact match only) incredibly fast. Here is instructions on how to use it

The next is a simple API endpoint, this is if you want to write your own scripts that send in a LCNAF heading outside of OpenRefine. It is very simple to run and has one endpoint, documentation here

And finally I made a command line python script that you just give it the path to a MARC binary or XML file and it will enrich the subfield zero on 100, 110, 700, 710 fields. This is if you just have a MARC record you want to enrich in bulk, documentation

Code and Data can be found on GitHub.