Repotting Old Digital Humanities Projects

Two Test Cases

Jan 31 2020

Knowing how to stop doing things is an important skill to learn, a bit like learning to say no when you don’t have the capacity to take something on. I've seen requirements around sustainability and sunsetting, that is, how to wind down or retire a project, become more prevalent on grant applications and in funding processes. I've also seen sunsetting become more of a topic around digital humanities and cultural heritage projects, for example in this presentation and site by Jason Ronallo and Bret Davidson looking at why and how you might sunset an institutional website.

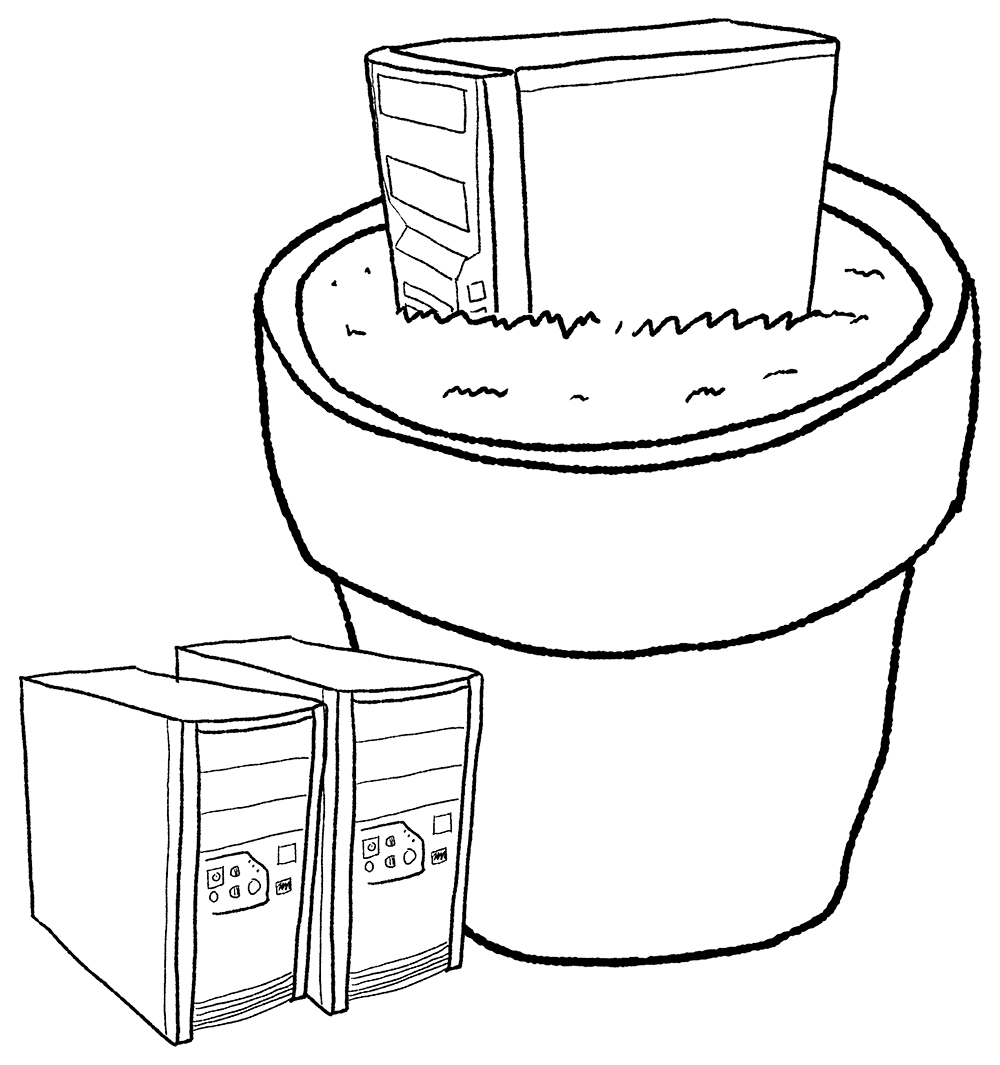

I do like the term sunsetting, but it does imply a sense of finality: shutting down, turning off, etc. This may be the case for some projects, but I also like the idea of employing the gardening metaphor of repotting. You repot a plant when it has outgrown its current home, but you also do it as part of general maintenance. Regardless of the metaphor the idea is the same: you have a site that was likely the result of a project or grant, it takes some effort to keep going in its current form, and you want to reduce that effort. This effort may take the form of your time and energy, or it might be that you want to reduce infrastructure or liability so you can grow and take on new projects.

I've recently gone through this process for what I would classify as two digital humanities project sites. I thought it might be helpful to show in detail what repotting them into a more sustainable home looks like.

The first site is PADB.net. This was a site containing the dataset that I used for writing my history of art master's thesis on the 1960s Fluxus artist movement. I created this site in 2011 and it is a LAMP stack, meaning it is a website running on a Linux box, with the Apache webserver and a MySQL database, and written in the PHP programming language. This was a pretty popular setup for developing websites in the early 2010s. The important parts are the MySQL and PHP, meaning there is a database powering interactive dynamic pages. If you want this site to continue to work you have to have a server running the database and PHP code. I didn't want to run this server anymore; the cost and time requirements were not something I wanted to maintain. Because this is a dynamic site I had two options: repackage the server into a new, more easily maintainable pot, or flatten it and make it not dynamic anymore.

The dynamism that a database allows for is twofold: one part is the ability to add new data, and the second is to have the presentation of the site be driven by the database, which allows you to have page templates that are populated by the information in the database. In this case the site is about the people, places and performances of the Fluxus movement, so each one of those categories had a page to represent it. There may be hundreds of entities in the database but only one PHP page that takes that data and generates a unique HTML page for each of them.

I decided it was unlikely that any new data would be added to this project. After almost ten years I can realistically say I will probably not be adding more things to it. And even if I did, I would likely want to do it differently anyway, with new technology, methodology, etc. If I remove that requirement I can then say the database is not needed; all I care about is the presentation layer, the HTML pages themselves. In this case what I want to do is turn it into a static site. I will remove the database requirement by creating an actual page for everything in the database. If every entity in the database has its own page that can be served by a webserver then I don't need the "MP" part of the LAMP.

The hierarchy of the site is pretty simple. There is a main list of all the resources in the database and then each resource has its own page, for example http://padb.net/resource/yoko_ono. With the dynamic site PHP reads the database and then populates the resource template with Yoko Ono's data. I just need to take the resulting HTML page and save it so it can be served without any database or PHP requirement to create it.

To get started I used the simple tool HTTrack. I pointed it at the existing database-powered site and it crawled the pages and made a local file system structure. So it made a root padb.net directory, a /resource/ directory and a /yoko_ono/ directory, and populated it with that static HTML page. This works pretty well, except I put some JavaScript visualizations on the site that are powered by a JSON API. The page loads and then makes another request via JavaScript for more data to populate a d3.js network diagram. HTTrack can only follow HTML links defined in the page. If there is a button that kicks off a JavaScript call when clicked, it doesn't know how to follow that. So what is needed is to make the API static as well. On the dynamic site, when the JavaScript requests resource/yoko_ono/event/data.json, the database builds that JSON file and returns it. I need to make that data.json for each resource so that when the JS calls the endpoint it is served the static file and everything works exactly as it did when there was a database powering it.

This is when things get a little more bespoke. I need to write something that adds these JSON files to the existing website hierarchy. I did this by writing a Python script that requests the JSON files from the dynamic API and writes them out as static JSON files in the correct web hierarchy. To accomplish this I just figured out all of my API endpoints, made a big list of all the resources and had Python loop through them, requesting each API endpoint and writing out the data file. You can see that Python script here.
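As a rough illustration of that loop (a minimal sketch, not the script linked above), something like the following would request each endpoint from the live site and save the response at the matching path. The `BASE_URL` scheme, the `ENDPOINTS` list and the function names are assumptions made for the example; only the `resource/<name>/event/data.json` pattern comes from the site described here.

```python
import json
import urllib.request
from pathlib import Path

# Illustrative assumptions, not taken from the original script.
BASE_URL = "http://padb.net"
ENDPOINTS = ["event/data.json"]  # hypothetical list of per-resource endpoints


def fetch_json(url):
    """Request a URL from the live dynamic site and return the parsed JSON."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def mirror_api(resources, out_root, fetch=fetch_json):
    """Save every endpoint of every resource as a static file under out_root.

    Returns a list of (relative path, error message) pairs for requests
    that failed, so broken endpoints can be reviewed afterwards.
    """
    failures = []
    for slug in resources:
        for endpoint in ENDPOINTS:
            rel_path = f"resource/{slug}/{endpoint}"
            try:
                data = fetch(f"{BASE_URL}/{rel_path}")
            except Exception as err:  # e.g. the API answering with a 500
                failures.append((rel_path, str(err)))
                continue
            # Mirror the URL path on disk so a static server finds the file.
            target = Path(out_root) / rel_path
            target.parent.mkdir(parents=True, exist_ok=True)
            target.write_text(json.dumps(data), encoding="utf-8")
    return failures
```

Calling `mirror_api(["yoko_ono"], "padb.net")` would write `padb.net/resource/yoko_ono/event/data.json` next to the HTML that HTTrack saved. Collecting failures instead of stopping makes it easier to spot endpoints that error out for only some resources.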

This is by far the most time-consuming part, with lots of trial and error to make sure you have everything. My process was to run a local webserver using Python by running "python3 -m http.server" on the command line in the root of the file hierarchy. This spins up a webserver and your local files are served on http://localhost:8000. If it works here locally it will work as a static site. During this process I found out one endpoint was not really working properly for some resources; the API would return a 500 error. So I decided to just remove that feature, and I modified the site's JavaScript file to hide the button that triggered it. It was not worth my time to hunt down a decade-old bug to fix a minor feature, so I just disabled it. You might find some features that cannot be made static. For example, if this site had a search feature I would have had to disable that as well. Something like a full-text index is not easy to recreate as static files. You have to curate what features can realistically be supported in this new form and be okay with possibly removing some features.
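Clicking through the local copy can be supplemented with a small check over the files themselves. The sketch below is one hypothetical way to do that: it assumes each resource page was saved as `index.html` inside its directory and that `event/data.json` is the only endpoint, both of which are assumptions for illustration rather than details of the actual site.

```python
import json
from pathlib import Path


def check_static_tree(root, resources, endpoints=("event/data.json",)):
    """Return a list of problems found in a flattened site directory."""
    problems = []
    for slug in resources:
        # Assumed layout: one index.html per resource directory.
        page = f"resource/{slug}/index.html"
        if not (Path(root) / page).is_file():
            problems.append(f"missing page: {page}")
        for endpoint in endpoints:
            rel_path = f"resource/{slug}/{endpoint}"
            data_file = Path(root) / rel_path
            if not data_file.is_file():
                problems.append(f"missing data: {rel_path}")
                continue
            # A file that exists but does not parse would break the page's JS.
            try:
                json.loads(data_file.read_text(encoding="utf-8"))
            except json.JSONDecodeError:
                problems.append(f"invalid JSON: {rel_path}")
    return problems
```

An empty list from `check_static_tree("padb.net", ["yoko_ono"])` would mean every listed resource has its page and parseable data files. It does not replace loading the pages in a browser, since it cannot tell whether the visualizations actually render.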

I finally have all the static HTML and JSON that powers the site, removing the "MP" from the LAMP. The last part is to remove the "LA" as well. To do this we just need to host the site somewhere that can serve static content. As I don't want to be running a server, even a server just sending static content, I need another hosting provider. I realistically had two options: put the site into an AWS S3 bucket and serve it from there, or use GitHub Pages to serve it. I went with GitHub Pages because it is free and supports custom domain names, meaning I could keep the PADB.net address, and it is kind of nice that the site can be revision controlled since it is made up of static files.

To do this I simply made a new GitHub repo, cloned it locally, moved the site hierarchy into it, then committed and pushed it. I then turned on page hosting in the repo settings and set up the custom domain settings. The result is that PADB.net is now served from GitHub Pages as static files, but there is really no difference from the user’s perspective.

The next example is more complicated because we decided it could not be made into a static site. Linked Jazz's 52nd Street is a crowdsourcing application that lets users help classify the relationships between jazz musicians based on the content of their oral history interviews. We still wanted the site to work, since we may use it in the future with new data, but the current way it is being hosted is unsustainable. It is another dated LAMP site that I wrote about eight years ago. Everything is old: Apache, MySQL and PHP. Upgrading to new versions of Apache and MySQL is not a big deal; you can load SQL data from an old MySQL instance into a new one fairly seamlessly. But trying to run a PHP app written for 5.x with, say, PHP version 7 is going to cause problems. You could go through and refactor the code, but that is a lot of effort.

My solution is to repot this project into a Docker container. I can run the site using an old version of PHP in its own container, it can have its own Apache and MySQL in there with it and it will still be a dynamic LAMP site, just isolated in its own little sandbox. By dockerizing this app I can easily move it around to another server. If something happens and I need to wipe the current Linked Jazz server I don't have to worry about configuring everything so this old LAMP site works. As long as Docker is installed I can run it in its own container and restore it back to normal in a few minutes.

Luckily someone has been maintaining Docker images of legacy PHP. Using one of these as my base image, I loaded the website’s PHP codebase into the Docker container. I also had to load the existing database into the Docker MySQL, so I used mysqldump to create a backup on the existing server and then loaded that into the Dockerized MySQL. There were some modifications that had to be made to the codebase, like updating the MySQL credentials and fixing some hard-coded paths to assets like images and CSS files. But I was shocked when I started it up and it more or less worked. I also researched some ways to make the Docker LAMP stack a little less resource intensive, since I might have many of these Dockerized apps running, all with their own Apache and MySQL.
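Hunting for hard-coded paths and credentials in an old codebase is tedious by hand. As a hedged sketch of one way to list candidates before editing them (not how the 52nd Street code was actually updated), the function below scans source files for strings you supply. The function name, the file suffixes and the example search strings are all hypothetical.

```python
from pathlib import Path


def find_hardcoded(root, needles, suffixes=(".php", ".html", ".js", ".css")):
    """Return (relative path, line number, line) for lines containing a needle."""
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in suffixes:
            continue
        # errors="replace" keeps old files with odd encodings from aborting the scan.
        text = path.read_text(encoding="utf-8", errors="replace")
        for number, line in enumerate(text.splitlines(), start=1):
            if any(needle in line for needle in needles):
                rel = path.relative_to(root).as_posix()
                hits.append((rel, number, line.strip()))
    return hits
```

For example, `find_hardcoded("app", ["/var/www/", "mysql_connect("])` would list every line mentioning an assumed old document root or a database connection call, which gives a checklist of places to review once the code is inside the container.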

You can see the modifications I made in the config files here

And see the final Docker compose file that launches the 52nd St app

By repotting this app into a Docker container I did not lose any functionality, but I did reduce the effort of maintaining old infrastructure. The app runs in its own container, so I can upgrade the real server software without having to worry whether doing so will break this old LAMP application. I won't go into details in this post about how the server is configured, but the gist is that I use the Nginx webserver as a reverse proxy to the Dockerized apps. When a request comes to www.linkedjazz.org/52ndStreet, Nginx passes it to the localhost address the app is running on, but to the user it looks like it is still being served seamlessly from linkedjazz.org. This way I can squeeze a lot of legacy things neatly onto one server, and maintaining them is much easier.

I have one bonus example, something I really think of as sunsetting rather than repotting. Well, maybe repotting into the garbage can. I made a site as a class project in graduate school to explore the possibilities of embedded metadata in digital assets. I had to evaluate whether repotting this tool was worth the effort. It could not be made into a static site because it is not a content site: you upload a digital asset and it informs you of the embedded metadata present. I could possibly Dockerize it, but it requires a special PHP module that was difficult to get working almost a decade ago, and the thought of trying to get it functional today, even with Docker, sounded extravagant. So I said farewell little website, you had a good run, someone even used you to write an academic paper, but you can't do everything and this is something that can be let go. I made sure to make some videos of the site, do a web capture of it, put all the source code on GitHub and redirect the domain to a page with all this information.

It's sad to turn off something you spent time building but you also get the satisfaction of not having to think about it ever again. And maybe that little room that is freed up in your brain is the space needed to make it to the next thing.